
Jinmyeong Kim

I received my M.S. degree under the supervision of Professor Sung-Bae Cho at the SoftComputing Lab, Yonsei University, in Seoul, South Korea. My research interests lie in video understanding and multimodal deep learning. Recently, I have been particularly interested in:

I am currently looking for a research lab to join for my Ph.D.


News

🎉 2025.08: Received a Master of Engineering in Computer Science from Yonsei University.

🎉 2025.06: One paper on mitigating object hallucination in large vision-language models was accepted at ICCV 2025!

🎉 2025.04: One paper on unsupervised video anomaly detection was accepted in Pattern Recognition (2025)!

Research

I'm interested in computer vision, deep learning, generative AI, image processing, and multimodal deep learning.


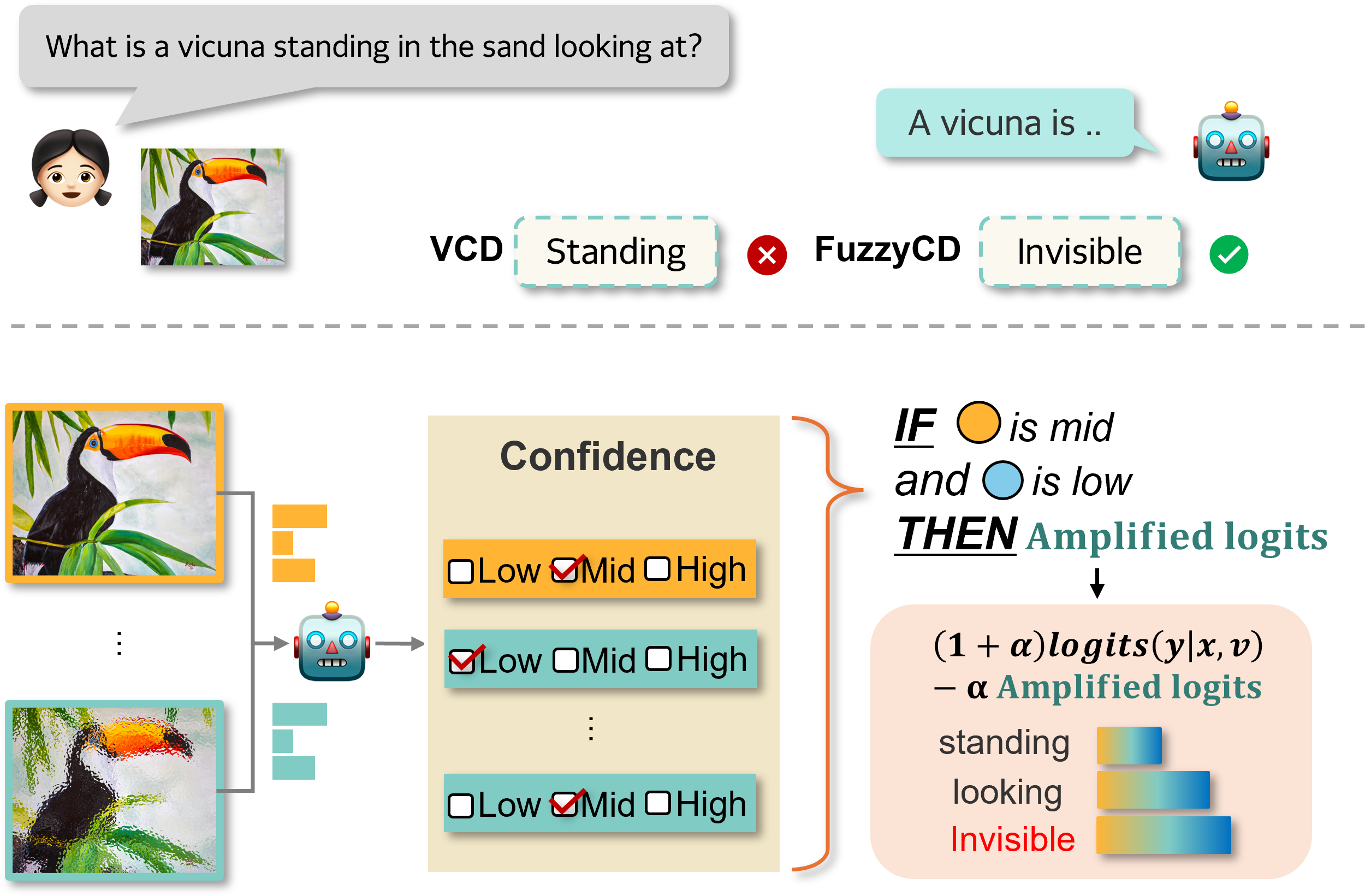

Fuzzy Contrastive Decoding to Alleviate Object Hallucination in Large Vision-Language Models

Jieun Kim, Jinmyeong Kim, Yoonji Kim, Sung-Bae Cho
ICCV, 2025
page / paper


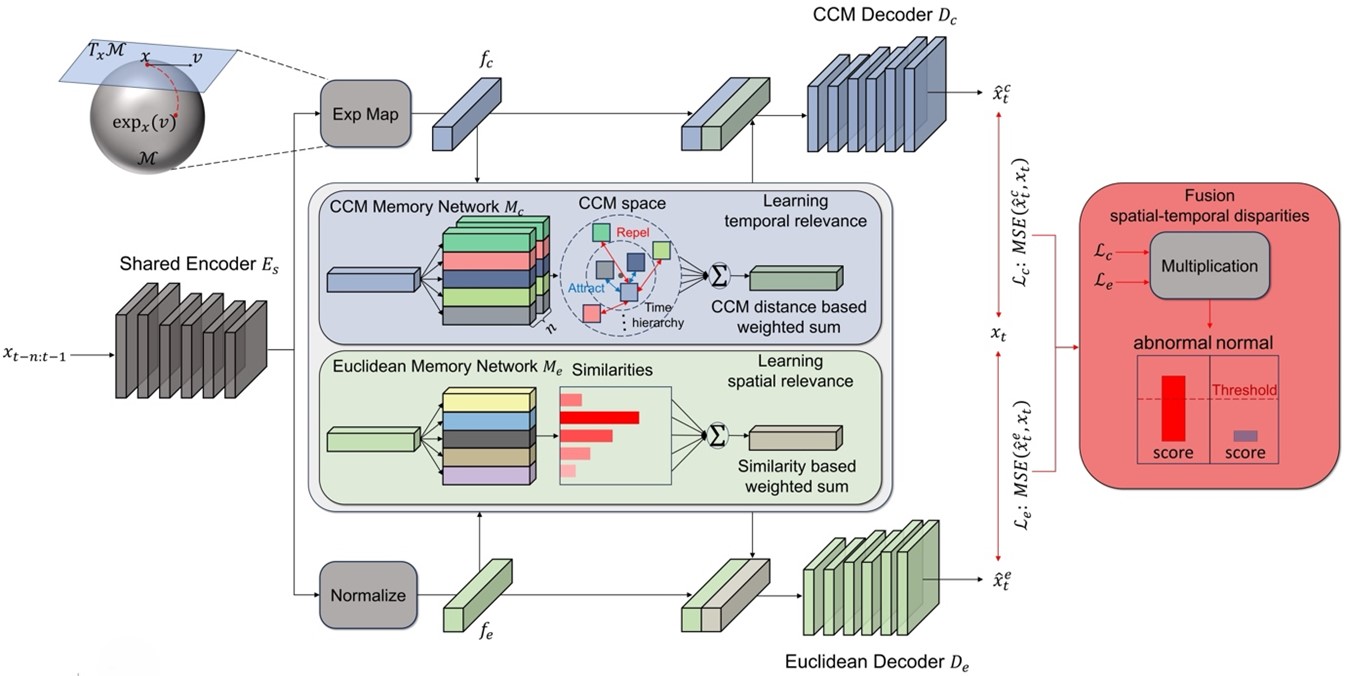

Unsupervised Video Anomaly Detection by Memory Network with Autoencoders in Euclidean and Non-Euclidean Spaces

Jinmyeong Kim, Sung-Bae Cho
Pattern Recognition, 2025 (IF: 7.5)
paper


Design and source code from Jon Barron.